HP ProLiant DL380p High-performance computing with accelerated HP ProLiant ser - Page 5

Enablement for HPC in ProLiant servers - quickspecs

|

View all HP ProLiant DL380p manuals

Add to My Manuals

Save this manual to your list of manuals |

Page 5 highlights

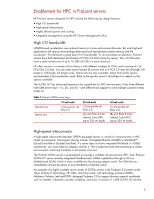

Enablement for HPC in ProLiant servers

HP ProLiant servers designed for HPC include the following key design features:

- High I/O bandwidth
- High-speed interconnects
- Highly efficient power and cooling
- Integrated management using the HP Cluster Management Utility

High I/O bandwidth

GPGPU-based accelerators use on-board memory to store and process data sets. However, most high-end applications still need to move large data and result sets between system memory and the accelerator, which demands a great deal of I/O bandwidth. To accommodate accelerators, ProLiant servers provide both dedicated and shared x16 PCIe 2.0 slots (the mix varies by server). Each x16 PCIe 2.0 slot has a data transfer rate of up to 16 GB/s (8 GB/s in each direction).

HP offers servers in a variety of form factors, with different numbers of CPUs and different mixes of x16 PCIe 2.0 slots. Some ProLiant DL servers can be ordered with x16 PCIe 2.0 slots for full-length, full-height or half-length, full-height cards, and option kits are also available. Select ProLiant DL servers accommodate 2-slot (double-width) accelerator cards. Refer to the specific server's QuickSpecs for details on the available options.

The SL390s G7 has enhanced expansion-slot capabilities for HPC environments. It is available in three half-width server trays (1U, 2U, and 4U), each with different slot support to meet different customer needs (Table 4).

Table 4. ProLiant SL390s server trays

| Server tray | Standard slot | Internal slots |
|---|---|---|
| 1U half-width | (1) low-profile x16 PCIe 2.0 | None |
| 2U half-width | (1) low-profile x8 PCIe 2.0 | (3) x16 PCIe 2.0 for internal 2-slot GPUs (up to 225 W each) |
| 4U half-width | (1) low-profile x8 PCIe 2.0 | (8) x16 PCIe 2.0 for internal 2-slot GPUs (up to 225 W each) |

High-speed interconnects

A high-speed interconnect between GPGPU-equipped servers is critical for communication in HPC cluster environments. Interconnect choices include 10 Gigabit Ethernet (10GbE) and InfiniBand™ (Quad Data Rate or Double Data Rate).
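The PCIe 2.0 slot figures quoted for ProLiant servers, and the relative speeds of the interconnect choices, follow from each link's lane count, per-lane signaling rate, and line encoding. The sketch below is an illustration rather than HP material. It assumes PCIe 2.0 signals at 5 GT/s per lane with 8b/10b encoding, InfiniBand uses 4x links (5 Gb/s per lane for DDR, 10 Gb/s for QDR, both 8b/10b), and 10GbE uses 10GBASE-R signaling (10.3125 GBd with 64b/66b). The `Link` class and `transfer_seconds` helper are hypothetical names, and the results ignore packet and protocol overhead, so achievable throughput is lower.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    """A serial link described by lane count, signaling rate, and line encoding."""

    name: str
    lanes: int            # number of lanes in the link
    gt_per_lane: float    # signaling rate per lane, in GT/s (gigatransfers per second)
    payload_bits: int     # data bits carried by each encoded block
    encoded_bits: int     # bits actually sent on the wire for that block

    def gbytes_per_s_per_direction(self) -> float:
        """Encoded-rate data bandwidth in one direction, in GB/s (10**9 bytes/s).

        Multiplies before dividing so the common cases come out exact.
        Packet and protocol overhead are ignored, so real throughput is lower.
        """
        gbits = self.lanes * self.gt_per_lane * self.payload_bits / self.encoded_bits
        return gbits / 8

    def gbytes_per_s_aggregate(self) -> float:
        """Both directions combined (these full-duplex links send and receive at once)."""
        return 2 * self.gbytes_per_s_per_direction()


# Assumed link parameters (illustrative, not taken from HP documentation).
LINKS = [
    Link("PCIe 2.0 x16", lanes=16, gt_per_lane=5.0, payload_bits=8, encoded_bits=10),
    Link("InfiniBand 4x DDR", lanes=4, gt_per_lane=5.0, payload_bits=8, encoded_bits=10),
    Link("InfiniBand 4x QDR", lanes=4, gt_per_lane=10.0, payload_bits=8, encoded_bits=10),
    Link("10GbE (10GBASE-R)", lanes=1, gt_per_lane=10.3125, payload_bits=64, encoded_bits=66),
]


def transfer_seconds(link: Link, gigabytes: float) -> float:
    """Lower-bound time to move `gigabytes` GB across `link` in one direction."""
    return gigabytes / link.gbytes_per_s_per_direction()


if __name__ == "__main__":
    for link in LINKS:
        print(
            f"{link.name:<20} {link.gbytes_per_s_per_direction():5.2f} GB/s per direction, "
            f"{link.gbytes_per_s_aggregate():5.2f} GB/s aggregate"
        )
    # Hypothetical 4 GB data set copied from system memory to an accelerator.
    print(f"4 GB over PCIe 2.0 x16: {transfer_seconds(LINKS[0], 4.0):.2f} s")
```

For PCIe 2.0 x16 the sketch yields 8 GB/s per direction and 16 GB/s aggregate, matching the slot figures above, so a 4 GB data set needs at least 0.5 s to reach the accelerator. A 4x QDR InfiniBand link works out to 4 GB/s per direction, half the per-direction bandwidth of an x16 PCIe 2.0 slot under these assumptions.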
If a server does not have integrated InfiniBand or 10GbE connectivity, you must install an adaptor card in it. Doing so is expensive and time-consuming in cluster environments containing hundreds or thousands of servers.

The ProLiant SL390s server is purpose-built to provide a scalable HPC infrastructure. Each ProLiant SL390s G7 server includes integrated InfiniBand and 10GbE connectivity through a LAN on Motherboard (LOM), which is more cost-effective than buying adaptor cards. With the LOM, you can connect the server directly to an InfiniBand or Ethernet switch without installing an adaptor card.

The Tsubame 2.0 system at the Tokyo Institute of Technology is an example of a highly scalable cluster built from SL390s servers. HP, NEC, and Tokyo Tech, with technology partners NVIDIA, Mellanox/Voltaire, Intel, and DDN, designed Tsubame 2.0 to support diverse research workloads such as weather, informatics, and protein modeling. It consists of 1,408 SL390s G7 servers,