HP ProLiant 2500 Compaq ProLiant Cluster HA/F100 and HA/F200 Administrator Gui - Page 44

Reducing Single Points of Failure in the HA/F100 Configuration



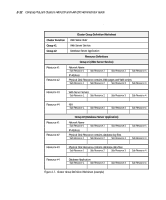

Designing the Compaq ProLiant Clusters HA/F100 and HA/F200

Use the resource dependency tree concept to review your company's availability needs. It is a useful exercise, directing you to record the exact design and definition of each cluster group.

Reducing Single Points of Failure in the HA/F100 Configuration

The final planning consideration is reducing single points of failure. Depending on your needs, you may leave all vulnerable areas alone, accepting the risk associated with a potential failure. Or, if the risk of failure is unacceptable for a given area, you may elect to use a redundant component to minimize, or remove, the single point of failure.

NOTE: Although not specifically covered in this section, redundant server components (such as power supplies and processor modules) should be used wherever possible. These features will vary based upon your specific server model.

The single points of failure described in this section are:

- Cluster interconnect
- Fibre Channel data paths
- Non-shared disk drives
- Shared disk drives

NOTE: The Compaq ProLiant Cluster HA/F200 addresses the single points of failure listed above with its dual redundant loop configuration. For more information, refer to the "Enhanced High Availability Features of the HA/F200" section of this chapter.

Cluster Interconnect

The interconnect is the primary means for the cluster nodes to communicate. Intracluster communication is crucial to the health of the cluster. If communication between the cluster nodes ceases, MSCS must determine the state of the cluster and take action, in most cases bringing the cluster groups offline on one of the nodes and failing over all cluster groups to the other node.

Following are two strategies for increasing the availability of intracluster communication. Combined, these strategies provide even more redundancy.