HP ProLiant DL288 ISS Technology Update, Volume 7, Number 7 - Page 2

ISS Technology Update

Page 2 highlights

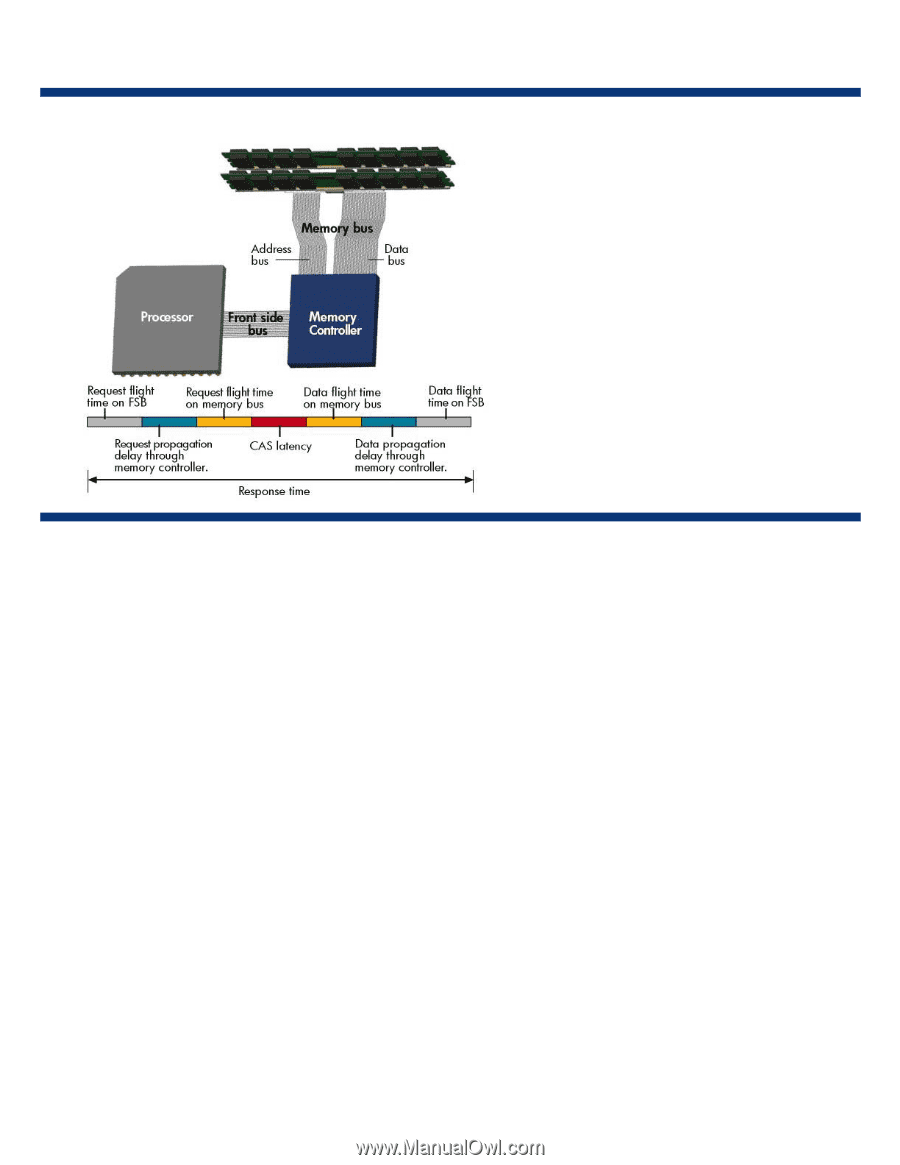

Figure 1-1. Response time of the processor's request for data

Memory bandwidth is the theoretical maximum rate (in bytes per second) at which data can be read from or stored by SDRAM. For standard SDRAM, the bandwidth is calculated by multiplying the width of the memory bus (channel) in bytes by the bus frequency in MHz and by the number of channels. For example, the bandwidth of a dual-channel, 64-bit (8-byte), 400-MHz memory bus is 8 bytes × 400 MHz × 2 channels = 6,400 MB/s, or 6.4 GB/s. This bandwidth is doubled for Double Data Rate (DDR) SDRAM.

Some applications, such as high-performance computing (HPC), 3D graphics, and multimedia, are sensitive to memory bandwidth because the data is seldom cached. A memory-intensive application running on a server with multi-core processors can saturate the shared memory bus, degrading performance for all the jobs running on that processor.

Other applications, such as databases and online transaction processing (OLTP), are more sensitive to latency. Database traffic is sensitive to latency because the delay can represent a much higher percentage of the total response time. For OLTP applications, lower latency means more transactions can be processed.

Reducing latency can have a greater impact on performance than increasing the bandwidth because lower latency decreases the processor wait time. On the other hand, high latency can negate the performance benefit of increasing the memory bandwidth.
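
The bandwidth arithmetic described above can be checked with a few lines of Python. This is a minimal sketch, not part of the original newsletter: the function name `peak_bandwidth_bytes_per_s` and its parameters are hypothetical, and it assumes decimal units (1 MHz = 1,000,000 cycles per second, 1 GB = 10^9 bytes).

```python
def peak_bandwidth_bytes_per_s(bus_width_bytes, bus_freq_mhz, channels, ddr=False):
    """Theoretical peak memory bandwidth in bytes per second.

    Hypothetical helper for illustration only. It multiplies bus width,
    bus frequency, and channel count, and doubles the result for DDR,
    which transfers data twice per clock cycle.
    """
    transfers_per_clock = 2 if ddr else 1
    cycles_per_second = bus_freq_mhz * 1_000_000  # MHz -> Hz (decimal units assumed)
    return bus_width_bytes * cycles_per_second * channels * transfers_per_clock


# The example from the text: dual-channel, 64-bit (8-byte), 400-MHz bus.
sdr = peak_bandwidth_bytes_per_s(bus_width_bytes=8, bus_freq_mhz=400, channels=2)
ddr = peak_bandwidth_bytes_per_s(bus_width_bytes=8, bus_freq_mhz=400, channels=2, ddr=True)

print(f"Standard SDRAM: {sdr / 1e9:.1f} GB/s")  # 6.4 GB/s
print(f"DDR SDRAM:      {ddr / 1e9:.1f} GB/s")  # 12.8 GB/s
```

These figures are theoretical ceilings. As the text notes, real throughput also depends on latency and on how many cores share the memory bus.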