HP LH4r HP IA-32 Server Long Distance Cluster Interconnect for Windows Phase 1 - Page 4

hp solution overview

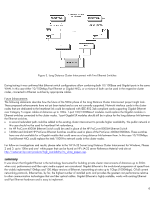

GigaNet

GigaNet is a relatively new, high-performance system interconnect technology based on the emerging Virtual Interface Architecture (VIA) industry standards. Because it is implemented in hardware, it eliminates the overhead of the operating system and the TCP/IP network stack. Relieving the CPU of communication work lowers server processor utilization and leaves more processor capacity for applications. GigaNet meets low-latency requirements better than competing Gigabit Ethernet technologies while offering comparably high bandwidth. At present, the Emulex cLAN product line offers PCI host adapters and switches that support a maximum of 30 m connectivity over copper cables.

Project Implementation

Gigabit Ethernet was selected for testing in this first phase of the Long Distance Cluster Interconnect project, which targets the 500 m range. The factors in favor of this decision were:

• Hewlett-Packard no longer supports FDDI PCI adapters for future implementations.
• VIA was primarily targeted at high-performance inter-server communication over distances of up to 50 meters. Because the technology is still being developed, it can be monitored for future implementations.
• Gigabit Ethernet has no disadvantages compared with FDDI technology.
• Gigabit Ethernet is based on Ethernet technology, so the migration path is relatively painless from an investment and training point of view.
• Future Gigabit Ethernet developments could further enhance the usability of this technology for stretched clusters.
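To put the 500 m target range in perspective, the one-way propagation delay over fiber can be estimated as distance × group index / c. The sketch below assumes a group index of about 1.47 for silica multimode fiber (a typical value, not a figure from this paper) and models only the time light spends in the fiber. Adapter, driver, switch, and protocol-stack latency, which is where VIA's hardware implementation makes its difference, is not modeled. The function names are illustrative.

```python
# Speed of light in vacuum, m/s (exact by SI definition).
C_VACUUM_M_PER_S = 299_792_458.0

# ASSUMPTION: typical group index of silica multimode fiber; not stated in the paper.
FIBER_GROUP_INDEX = 1.47


def fiber_delay_us(length_m: float, group_index: float = FIBER_GROUP_INDEX) -> float:
    """One-way propagation delay through a fiber of the given length, in microseconds."""
    if length_m < 0:
        raise ValueError("length_m must be non-negative")
    speed_m_per_s = C_VACUUM_M_PER_S / group_index  # light travels slower in glass
    return length_m / speed_m_per_s * 1e6


def round_trip_us(length_m: float, group_index: float = FIBER_GROUP_INDEX) -> float:
    """Round-trip propagation delay (there and back), in microseconds."""
    return 2 * fiber_delay_us(length_m, group_index)


if __name__ == "__main__":
    distance_m = 500.0  # target range of this project phase
    print(
        f"{distance_m:.0f} m: one-way {fiber_delay_us(distance_m):.2f} us, "
        f"round trip {round_trip_us(distance_m):.2f} us"
    )
```

Under these assumptions, 500 m of fiber adds roughly 2.5 µs one way and about 4.9 µs round trip, so at this range the distance itself contributes only microseconds of latency.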
Hardware components

• HP Netserver LPr servers as cluster nodes, each with one 550 MHz Pentium III CPU and 256 MB RAM
• Intel Pro/1000-SX Gigabit NIC for the cluster interconnect in each node
• HP D5013B NIC for the public network
• HP D5013B NIC for the public network in the domain controller and all clients
• Intel Pro/1000-SX Gigabit NIC for the cluster interconnect in the monitor client system (Windows 2000 configuration only)
• 500 m, 50 µm short-wave multimode fiber-optic cable in the private network segment shown in Figure 2 on the following page
• Two 5 m short-wave multimode fiber-optic cables in the private network segment, as shown in Figure 2
• HP ProCurve 8000M Ethernet Switch with three 1-port Gigabit-SX modules for the cluster interconnect and two 8-port 10/100Base-TX modules for the public network
• All other cluster hardware components are the same as those described in the white paper High Availability MSCS SPOFless Cluster Solution, listed in the References section of this document.

Software components

• Cluster servers: Windows NT 4.0 Server EE, SP6a, or Windows 2000 Advanced Server, SP1
• Clients: Windows NT 4.0 Workstation, SP6a, or Windows 2000 Professional, SP1
• Monitor client and domain controller: Windows NT 4.0 Server, SP6a, or Windows 2000 Server, SP1
• Intel Pro/1000 Gigabit NIC driver versions: Windows NT 4.0: 1.17; Windows 2000: 1.39
• HP D5013B NIC driver versions: Windows NT 4.0: 2.35; Windows 2000: 2.27
• HP ProCurve 8000M Ethernet Switch: firmware C.07.01, ROM C.06.01

Test environment

The following test suites were applied:

• Microsoft HCT 9.5, phase 5, for 24 hours
• Intensive storage I/O stress for 48 hours
• Fault injection at different points in the cluster to simulate failures and create failover conditions
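The 500 m, 50 µm multimode run listed under Hardware components sits at the limit of the 1000BASE-SX operating ranges in IEEE 802.3 Clause 38, assuming the Pro/1000-SX NICs and Gigabit-SX switch modules use standard 1000BASE-SX optics. The sketch below encodes those ranges and checks each fiber segment on its own. This rests on an assumption about the Figure 2 topology (not reproduced here): every segment runs between a NIC and a switch port and is therefore a separate link. The modal bandwidth of the tested cable is not stated in this paper, so both 50 µm grades are checked. `within_sx_range` and the segment list are hypothetical names.

```python
from typing import Dict, List, Tuple

# 1000BASE-SX operating ranges from IEEE 802.3 Clause 38, in metres,
# keyed by (core diameter in um, modal bandwidth at 850 nm in MHz*km).
SX_OPERATING_RANGE_M: Dict[Tuple[float, int], Tuple[float, float]] = {
    (62.5, 160): (2.0, 220.0),
    (62.5, 200): (2.0, 275.0),
    (50.0, 400): (2.0, 500.0),
    (50.0, 500): (2.0, 550.0),
}


def within_sx_range(length_m: float, core_um: float, modal_bw_mhz_km: int) -> bool:
    """Return True if a single 1000BASE-SX link of this length is inside the specified range."""
    key = (core_um, modal_bw_mhz_km)
    if key not in SX_OPERATING_RANGE_M:
        raise ValueError(
            f"no 1000BASE-SX range listed for {core_um} um / {modal_bw_mhz_km} MHz*km fiber"
        )
    low, high = SX_OPERATING_RANGE_M[key]
    return low <= length_m <= high


# Hypothetical segment list modeled on the hardware components above.
# ASSUMPTION: each cable is its own NIC-to-switch link.
SEGMENTS_M: List[Tuple[str, float]] = [
    ("500 m long-distance run", 500.0),
    ("5 m patch cable A", 5.0),
    ("5 m patch cable B", 5.0),
]

if __name__ == "__main__":
    # ASSUMPTION: the cable grade is unknown, so check both 50 um grades.
    for bandwidth in (400, 500):
        for name, length in SEGMENTS_M:
            ok = within_sx_range(length, 50.0, bandwidth)
            status = "within range" if ok else "OUT OF RANGE"
            print(f"50 um / {bandwidth} MHz*km, {name}: {status}")
```

Under these assumptions, the 500 m run is exactly at the 500 m limit on 400 MHz·km fiber and leaves 50 m of margin on 500 MHz·km fiber. The same run would exceed the specified range on any 62.5 µm fiber.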