HP ML530 RDMA protocol: improving network performance - Page 3



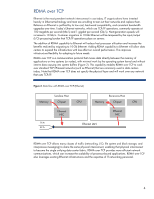

must be transferred in and out of memory several times (Figure 1): received data is written to the device driver buffer, copied into an operating system (OS) buffer, and then copied into application memory space.

Figure 1. Typical flow of network data in receiving host (diagram labels: Memory, Chipset, Network I/F, CPU)

NOTE: The actual number of memory copies varies depending on OS (Example: Linux uses 2).

These copy operations add latency, consume memory bus bandwidth, and require host processor (CPU) intervention. In fact, the TCP/IP protocol overhead associated with 1 Gb of Ethernet traffic can increase system processor utilization by 20 to 30 percent. Consequently, software overhead for 10 Gb Ethernet operation has the potential to overwhelm system processors. An InfiniBand network using TCP operations to satisfy compatibility issues will suffer from the same processing overhead problems that Ethernet networks have.

RDMA solution

Inherent processor overhead and constrained memory bandwidth are performance obstacles for networks that use TCP, whether out of necessity (Ethernet) or compatibility (InfiniBand). For Ethernet, the use of a TCP/IP offload engine (TOE) and RDMA can diminish these obstacles. A network interface adapter (NIC) with a TOE assumes TCP/IP processing duties, freeing the host processor for other tasks. The capability of a TOE is defined by its hardware design, the OS programming interface, and the application being run.

RDMA technology was developed to move data from the memory of one computer directly into the memory of another computer with minimal involvement from their processors. The RDMA protocol includes information that allows a system to place transferred data directly into its final memory destination without additional or interim data copies. This "zero copy" or "direct data placement" (DDP) capability provides the most efficient network communication possible between systems.
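The difference between the copy-based receive path and direct data placement can be illustrated with a toy model. The sketch below is not an RDMA or NIC API: the function names are hypothetical, ordinary Python buffers stand in for the driver, OS, and application buffers, and it assumes each CPU copy costs one read plus one write on the memory bus.

```python
from __future__ import annotations

# Toy model of the receive path. All names are hypothetical; no real
# NIC, TOE, or RDMA interface is used here.


def receive_with_copies(payload: bytes, copies: int = 2) -> tuple[bytearray, int]:
    """Conventional path: the adapter writes into a driver buffer, then the
    data is copied `copies` times (driver -> OS buffer -> application)."""
    buf = bytearray(payload)            # adapter writes into the driver buffer
    bytes_moved = len(buf)
    for _ in range(copies):
        nxt = bytearray(len(buf))
        nxt[:] = buf                    # CPU copy: assumed one read + one write
        bytes_moved += 2 * len(buf)
        buf = nxt
    return buf, bytes_moved


def receive_direct(payload: bytes, app_buffer: bytearray, offset: int = 0) -> int:
    """Direct data placement: the payload is written once, straight into the
    application buffer at the offset the (hypothetical) header names."""
    view = memoryview(app_buffer)
    view[offset:offset + len(payload)] = payload   # single write, no interim copy
    return len(payload)


if __name__ == "__main__":
    data = b"x" * 1000
    _, moved = receive_with_copies(data, copies=2)
    app = bytearray(len(data))
    direct = receive_direct(data, app)
    print(f"copy path: {moved} bytes over the memory bus, direct: {direct}")
```

Under these assumptions, the two-copy path moves five times as many bytes across the memory bus as direct placement for the same payload, which is the overhead the "zero copy" approach is meant to avoid.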
Since the intent of both a TOE and RDMA is to relieve host processors of network overhead, they are sometimes confused with each other. However, the TOE is primarily a hardware solution that specifically takes responsibility for TCP/IP operations, while RDMA is a protocol solution that operates at the upper layers of the network communication stack. Consequently, TOEs and RDMA can work together: a TOE can provide localized connectivity with a device while RDMA enhances the data throughput with a more efficient protocol.

For InfiniBand, RDMA operations provide an even greater performance benefit since InfiniBand architecture was designed with RDMA as a core capability (no TOE needed). RDMA provides a faster path for applications to transmit messages between network devices and is applicable to both Ethernet and InfiniBand. Both of these interconnects can support all new and existing network standards such as Sockets Direct Protocol (SDP), iSCSI Extensions for RDMA (iSER), Network File System (NFS), Direct Access File System (DAFS), and Message Passing Interface (MPI).