HP ML530 RDMA protocol: improving network performance - Page 9

UPC - 720591250669

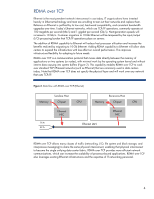

RDMA over InfiniBand

InfiniBand is a high-performance, low-latency alternative to Ethernet. The InfiniBand architecture uses a switched-fabric, channel-based design that suits distributed-computing environments where performance, infrastructure simplification, and convergence of component interconnects are key design goals. A data center built on an InfiniBand infrastructure can be less complex and easier to manage.

Like Ethernet, InfiniBand uses multi-layer processing to transfer data between nodes. Each InfiniBand node contains a host channel adapter (HCA) or target channel adapter (TCA) that connects to the InfiniBand network through a bidirectional serial link. However, an InfiniBand link can have multiple channel pairs (4x is the current typical implementation), with each channel carrying multiplexed virtual lanes of data at a 2.5-Gbps single data rate (SDR). More channel pairs per link (up to 12x) and faster signaling (up to quad data rate, or QDR) increase link bandwidth, so an InfiniBand infrastructure scales with system expansion and can provide from 2.5 to 120 Gbps in each direction. Processor overhead and server I/O bus architectures may, however, lower usable bandwidth by 10 percent or more.

RDMA data transactions over InfiniBand (Figure 5) occur in essentially the same way as described for Ethernet and offer the same advantages. However, because the InfiniBand communications stack was designed with RDMA as a core capability, InfiniBand provides greater RDMA performance than Ethernet.

Figure 5. RDMA data flow over InfiniBand
[Diagram: a sending host and a receiving host, each with CPU, memory, chipset, and an IB HCA; the two HCAs are connected by a 4x InfiniBand link made up of four TX/RX channel pairs (Ch 1 to Ch 4).]

An InfiniBand HCA operates much like an Ethernet NIC but uses a different software architecture: it relies on a separate communications stack, usually provided by the HCA manufacturer or by a third-party software vendor.
Therefore, operating an HCA requires first loading both the software driver and the communications stack for the specific operating system. Most existing InfiniBand clusters run on Linux. Drivers and communications stacks for Microsoft® Windows®, HP-UX, Solaris, and other operating systems are also available from various hardware and software vendors. Note that specific feature support varies among operating systems and vendors.
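The 2.5 to 120 Gbps range quoted above follows from multiplying the link width (1x, 4x, or 12x channel pairs) by the per-lane signaling rate. The short Python sketch below tabulates that product per direction. It is an illustration under stated assumptions: the 5-Gbps double data rate (DDR) value is filled in here and is not stated in this document, the function names are hypothetical, and the `overhead` parameter is a single illustrative knob standing in for the "10 percent or more" loss the text mentions, not a model of any specific source of overhead.

```python
# Per-lane signaling rate in Gbps, per direction. SDR (2.5) and QDR (10) come
# from the text; the DDR value (5) is an assumption added for completeness.
SIGNALING_GBPS = {"SDR": 2.5, "DDR": 5.0, "QDR": 10.0}

# Link widths: the number of TX/RX channel pairs aggregated into one link.
LINK_WIDTHS = (1, 4, 12)


def link_signaling_gbps(width: int, rate: str) -> float:
    """Raw signaling bandwidth of one link, in Gbps per direction."""
    if width not in LINK_WIDTHS:
        raise ValueError(f"unsupported link width: {width}x")
    if rate not in SIGNALING_GBPS:
        raise ValueError(f"unsupported signaling rate: {rate}")
    return width * SIGNALING_GBPS[rate]


def usable_estimate_gbps(width: int, rate: str, overhead: float = 0.10) -> float:
    """Signaling bandwidth reduced by a fractional overhead.

    The 0.10 default mirrors the text's "10 percent or more"; it is an
    illustrative figure, and real losses can be larger.
    """
    return link_signaling_gbps(width, rate) * (1.0 - overhead)


if __name__ == "__main__":
    # Tabulate every width/rate combination: 1x SDR is the minimum (2.5 Gbps)
    # and 12x QDR the maximum (120 Gbps), matching the range in the text.
    for width in LINK_WIDTHS:
        for rate in SIGNALING_GBPS:
            print(f"{width:>2}x {rate}: {link_signaling_gbps(width, rate):6.1f} Gbps")
```

For the typical 4x SDR link, this gives 4 x 2.5 = 10 Gbps of signaling bandwidth in each direction, or roughly 9 Gbps once a 10 percent overhead is subtracted.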
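Because an HCA is usable only after its driver and communications stack are loaded, it helps to have a quick way to confirm that the driver is present. The sketch below is one possible check, assuming a present-day Linux host whose kernel includes the RDMA subsystem; this interface is not described in this document. The kernel registers each RDMA device under `/sys/class/infiniband`, and each port exposes `state` and `rate` attributes. The sysfs paths and the assumed rate-string format (for example `40 Gb/sec (4X QDR)`, with no suffix for SDR) come from the Linux kernel's RDMA interface rather than from this brief, and the function names are hypothetical. A device appearing here shows only that the kernel driver is loaded, not that the rest of the communications stack is installed.

```python
import re
from pathlib import Path

# Assumed format of the sysfs "rate" attribute, e.g. "40 Gb/sec (4X QDR)".
# SDR links carry no speed suffix, e.g. "10 Gb/sec (4X)".
RATE_RE = re.compile(r"(?P<gbps>[\d.]+) Gb/sec \((?P<width>\d+)X(?: (?P<speed>\w+))?\)")


def list_hca_ports(sysfs_root: str = "/sys/class/infiniband"):
    """Return (device, port, state, rate) tuples for every HCA port found.

    An empty list means no RDMA device is registered, which usually means
    the HCA driver has not been loaded.
    """
    root = Path(sysfs_root)
    if not root.is_dir():
        return []
    found = []
    for dev in sorted(root.iterdir()):
        ports_dir = dev / "ports"
        if not ports_dir.is_dir():
            continue
        # Port directories are named by number ("1", "2", ...).
        for port in sorted(ports_dir.iterdir(), key=lambda p: int(p.name)):
            state = (port / "state").read_text().strip()
            rate = (port / "rate").read_text().strip()
            found.append((dev.name, int(port.name), state, rate))
    return found


def parse_rate(rate: str):
    """Split a rate string into (gbps, width, speed); no suffix means SDR."""
    m = RATE_RE.fullmatch(rate)
    if m is None:
        raise ValueError(f"unrecognized rate string: {rate!r}")
    return float(m["gbps"]), int(m["width"]), m["speed"] or "SDR"


if __name__ == "__main__":
    ports = list_hca_ports()
    if not ports:
        print("No InfiniBand devices registered; is the HCA driver loaded?")
    for dev, port, state, rate in ports:
        gbps, width, speed = parse_rate(rate)
        print(f"{dev} port {port}: {state}, {width}x {speed}, {gbps:g} Gbps")
```

Passing `sysfs_root` as a parameter keeps the function testable against a fake directory tree on machines without InfiniBand hardware. The parsed width and speed map directly onto the link widths and signaling rates described above.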