HP Cluster Platform Introduction v2010 Microsoft Windows Compute Clusters - Page 16

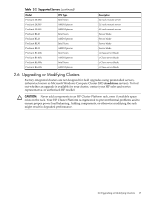

13 Gigabit Ethernet system interconnect, if present.
14 Gigabit Ethernet switch for cluster administrative network, if present.
15 InfiniBand system interconnect, if present.

2.3 Understanding Maximum System Limits

In this release of Microsoft Windows Compute Cluster 2003, supported HP Cluster Platform configurations are constrained as follows:

• HP Cluster Platform Express is constrained to clusters of 5 to 33 nodes.
• HP Cluster Platform CP3000 and CP4000 models are constrained to clusters of 5 to 512 nodes. Models employing blade and c-Class blade servers are constrained to a maximum of 1024 nodes.
• The InfiniBand interconnect is limited to single data rate (SDR) and double data rate (DDR).

2.4 Identifying Host Channel Adapters and Firmware

Every cluster node that is part of the high-speed InfiniBand fabric requires a host channel adapter (HCA) installed in its PCI bus. To ensure system performance, the HCA is always installed in a specific PCI slot on an unshared bus of the appropriate speed. For this release, the supported HCA models are listed in Table 2-1. Single-port InfiniBand cards are also supported.

Note: For instructions on upgrading the HCA firmware on Microsoft Windows Compute Clusters, refer to the Voltaire HCA 4X0 User Manual for Windows GridStack.

Table 2-1 Supported HCA Cards

| Model | PCI Bus Type | Description |
|---|---|---|
| 380299-B21: InfiniBand 4X | PCI-X | Dual-port card with 128 MB attached memory |
| 380298-B21: InfiniBand 4X | PCI-Express | Dual-port card with 128 MB attached memory |
| 409377-B21: InfiniBand 4X | PCI-Express | HP HPC 4X DDR IB Mezzanine HCA for HP BladeSystem c-Class |

For information on supported firmware and stack revisions, see the HP Cluster Platform InfiniBand Fabric Management and Diagnostic Guide. You can download the latest information about supported firmware revisions from http://www.docs.hp.com/en/highperfcomp.html. For more information, see Chapter 4, which describes the GridStack™ InfiniBand stack.
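
The node-count limits in Section 2.3 and the part numbers in Table 2-1 can be encoded as a small pre-deployment check. The following Python sketch is illustrative only: the platform labels (`express`, `cp3000`, `cp4000`, `blade`), the `ClusterConfig` record, and `check_config` are hypothetical names, not part of any HP or Microsoft tool. It assumes no minimum node count for blade models, because this page states only the 1024-node maximum for them, and it recognizes only the three part numbers in Table 2-1, so single-port cards (supported, but not listed by part number here) would be flagged.

```python
from dataclasses import dataclass
from typing import List, Optional

# Section 2.3 node-count limits as (minimum, maximum), keyed by hypothetical
# platform labels. The page states only a maximum for blade models, so their
# minimum is left as None (an assumption, not a documented rule).
NODE_LIMITS = {
    "express": (5, 33),     # HP Cluster Platform Express
    "cp3000": (5, 512),
    "cp4000": (5, 512),
    "blade": (None, 1024),  # blade and c-Class blade models
}

# Section 2.3: supported InfiniBand data rates.
SUPPORTED_IB_RATES = {"SDR", "DDR"}

# Table 2-1: supported HCA part number -> PCI bus type of the card.
SUPPORTED_HCAS = {
    "380299-B21": "PCI-X",
    "380298-B21": "PCI-Express",
    "409377-B21": "PCI-Express",  # c-Class mezzanine HCA
}


@dataclass
class ClusterConfig:
    platform: str                   # one of the NODE_LIMITS keys
    nodes: int
    ib_rate: Optional[str] = None   # None when there is no InfiniBand fabric
    hca_part: Optional[str] = None  # HCA part number, e.g. "380298-B21"
    hca_bus: Optional[str] = None   # bus type of the slot holding the HCA


def check_config(cfg: ClusterConfig) -> List[str]:
    """Return a list of problems; an empty list means no stated limit is violated."""
    problems: List[str] = []
    low, high = NODE_LIMITS[cfg.platform]
    if low is not None and cfg.nodes < low:
        problems.append(f"{cfg.nodes} nodes is below the minimum of {low}")
    if cfg.nodes > high:
        problems.append(f"{cfg.nodes} nodes exceeds the maximum of {high}")
    if cfg.ib_rate is not None:
        if cfg.ib_rate not in SUPPORTED_IB_RATES:
            problems.append(f"InfiniBand rate {cfg.ib_rate} is not supported")
        expected_bus = SUPPORTED_HCAS.get(cfg.hca_part)
        if expected_bus is None:
            problems.append(f"HCA {cfg.hca_part} is not listed in Table 2-1")
        elif cfg.hca_bus != expected_bus:
            problems.append(f"HCA {cfg.hca_part} requires a {expected_bus} slot")
    return problems
```

For example, `check_config(ClusterConfig("express", 40))` returns `["40 nodes exceeds the maximum of 33"]`, while a 128-node CP4000 configuration using DDR with a 380298-B21 card in a PCI-Express slot returns an empty list. Returning a list of messages, rather than stopping at the first failure, lets one pass report every violated limit.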

2.5 Identifying the Server Models Supported as Nodes

HP Cluster Platform hardware uses several CPU architectures and supports many models of HP server. Table 2-2 lists the servers that are supported in HP Cluster Platform under Microsoft Windows Compute Cluster 2003.

Table 2-2 Supported Servers

| Model | CPU Type | Description |
|---|---|---|
| ProLiant DL140 | Intel Xeon | 1U rack-mount server |
| ProLiant DL145 | AMD Opteron | 1U rack-mount server |
| ProLiant DL360 | Intel Xeon | 1U rack-mount server |
| ProLiant DL365 | AMD Opteron | 1U rack-mount server |
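
Table 2-2 can likewise serve as a lookup when auditing a node inventory. In this Python sketch, the inventory format (node name mapped to model string), the node names, and the function names are hypothetical. The table may continue beyond this page, so only the four models shown here are included; a node reported as unrecognized should be checked against the complete table before it is treated as unsupported.

```python
from typing import Dict, List, Set

# Table 2-2 rows shown on this page: server model -> CPU type.
SUPPORTED_SERVERS = {
    "ProLiant DL140": "Intel Xeon",
    "ProLiant DL145": "AMD Opteron",
    "ProLiant DL360": "Intel Xeon",
    "ProLiant DL365": "AMD Opteron",
}


def unsupported_nodes(inventory: Dict[str, str]) -> List[str]:
    """Return, sorted, the nodes whose model is not among the rows above."""
    return sorted(
        node for node, model in inventory.items() if model not in SUPPORTED_SERVERS
    )


def cpu_types(inventory: Dict[str, str]) -> Set[str]:
    """Return the CPU types present among nodes with a recognized model."""
    return {
        SUPPORTED_SERVERS[model]
        for model in inventory.values()
        if model in SUPPORTED_SERVERS
    }


if __name__ == "__main__":
    # Hypothetical inventory; "Hypothetical Model X" stands in for any unlisted model.
    nodes = {
        "node01": "ProLiant DL360",
        "node02": "ProLiant DL145",
        "node03": "Hypothetical Model X",
    }
    print(unsupported_nodes(nodes))  # ['node03']
    print(sorted(cpu_types(nodes)))  # ['AMD Opteron', 'Intel Xeon']
```

Keeping the table as a dictionary means that adding the remaining rows of Table 2-2 is a data change only; neither function needs to be modified.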