HP InfiniBand FDR 2-port 545M Converged Networks and Fibre Channel over Ethernet - Page 5

Direct-attach storage, Storage Area Network, iSCSI: SANs using TCP/IP networks, Cluster interconnects

|

View all HP InfiniBand FDR 2-port 545M manuals

Add to My Manuals

Save this manual to your list of manuals |

Page 5 highlights

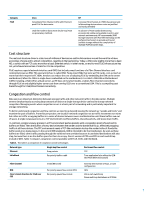

more connections for increased performance. Smaller servers, especially blade servers, have few option slots, and Fibre Channel host bus adapters (HBAs) add noticeably to server cost. FCoE is therefore a compelling choice for the first network hop in such servers. HP ProLiant BL G7 and Gen8 blade servers have embedded CNAs, called FlexFabric adapters, which eliminate some of the hardware costs associated with separate network fabrics. Depending on user configuration, these dual-port, multifunction FlexFabric adapters act as Ethernet adapters, host bus adapters, or both. Because the CNAs are embedded, the BladeSystem PCIe mezzanine card slots remain available for additional storage or data connections.

Direct-attach storage

While Direct Attached Storage (DAS) does not offer an alternative to converged networking, it's important to acknowledge that a great deal of the storage sold today is DAS, and that will continue to be the case. HP Virtual Connect direct-attach Fibre Channel for 3PAR Storage Solutions with Flat SAN technology can significantly improve how effectively you use the single-hop FCoE already deployed within existing network infrastructures. See the "Using HP Flat SAN technology to enhance single hop FCoE" section later in this paper to learn more.

Storage Area Network

Fibre Channel is the storage fabric of choice for most enterprise IT infrastructures. Until now, Fibre Channel required an intermediate SAN fabric to create your storage solution, and this fabric can be expensive and complex. Converged networks using FCoE have the potential to change these requirements in a way that lowers cost and complexity in the IT infrastructure.

iSCSI: SANs using TCP/IP networks

iSCSI infrastructures further demonstrate the desirability of network convergence with respect to cost savings. Two common deployments are end-to-end iSCSI solutions, typically found in entry-level to midrange network environments, and iSCSI front ends to Fibre Channel storage in large enterprise environments.
In the latter scenario, the FCoE benefit is that, from the server and SAN perspective, all of the traffic is Fibre Channel traffic and none of it goes through protocol translation. The FCoE solution means less overhead and easier debugging, resulting in significant cost savings.

Cluster interconnects

Cluster interconnects are special networks designed to provide low latency (and sometimes high bandwidth) for communication between pieces of an application running on two or more servers. The way application software sends and receives messages typically contributes more to latency than a small network fabric does. As a result, engineering the end-to-end path matters more than the design details of a particular switch ASIC. Cluster interconnects are used in supercomputers and historically have been important to the performance of parallel databases. More recently, trading applications in the financial services industry and other high-performance computing (HPC) applications have made extensive use of InfiniBand. Over the next few years, 10 and 40 Gigabit DCB Ethernet will become more common and less expensive, and RoCEE NICs (NICs supporting InfiniBand-like interfaces) will also become available. As a result, half of the current cluster interconnect applications may revert to Ethernet, leaving only the most performance-sensitive supercomputers and applications to continue running separate cluster networks.

Approaches to converged data center networks

As the industry continues its efforts to converge data center networks, various engineering tradeoffs become necessary. Different approaches may in fact be better for different environments, but in the end the industry needs to focus on a single approach to best serve its customers. Table 1 examines how current protocol candidates compare for converging data center networks in different network environments.
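Among these protocol candidates, the "no translation" property of FCoE noted above for iSCSI front ends comes from how FCoE is framed: a complete Fibre Channel frame is carried unchanged inside an Ethernet frame identified by EtherType 0x8906. The Python sketch below illustrates that encapsulation; it is not taken from this paper. The layout follows our reading of the FC-BB-5 framing (a 14-byte FCoE header ending in an SOF delimiter and a 4-byte trailer starting with an EOF delimiter). The SOF/EOF code values and the default 802.1Q priority of 3 are assumptions to verify against the standard, and the function names, MAC addresses and sample frame are hypothetical. The Ethernet FCS is left to the NIC.

```python
import struct
from typing import Optional

FCOE_ETHERTYPE = 0x8906  # EtherType assigned to FCoE
VLAN_TPID = 0x8100       # IEEE 802.1Q tag protocol identifier

# Delimiter codes: assumed values (SOFi3, EOFn); verify against FC-BB-5 / FC-FS
SOF_I3 = 0x2E  # start-of-frame, initiate, class 3
EOF_N = 0x41   # end-of-frame, normal


def mac_bytes(mac: str) -> bytes:
    """Convert 'aa:bb:cc:dd:ee:ff' into 6 raw bytes."""
    raw = bytes(int(part, 16) for part in mac.split(":"))
    if len(raw) != 6:
        raise ValueError(f"not a MAC address: {mac!r}")
    return raw


def encapsulate_fcoe(dst_mac: str, src_mac: str, fc_frame: bytes,
                     vlan_id: Optional[int] = None, priority: int = 3,
                     sof: int = SOF_I3, eof: int = EOF_N) -> bytes:
    """Wrap a complete FC frame (24-byte header + payload + CRC) in Ethernet.

    The FC frame is copied byte for byte; nothing is translated.
    The Ethernet FCS is not appended (the NIC normally adds it).
    """
    frame = mac_bytes(dst_mac) + mac_bytes(src_mac)
    if vlan_id is not None:
        if not (0 <= vlan_id <= 0xFFF and 0 <= priority <= 7):
            raise ValueError("VLAN ID must be 0-4095 and priority 0-7")
        tci = (priority << 13) | vlan_id  # PCP (3 bits) | DEI=0 (1 bit) | VID (12 bits)
        frame += struct.pack("!HH", VLAN_TPID, tci)
    frame += struct.pack("!H", FCOE_ETHERTYPE)
    # FCoE header: 4-bit version (0) and 100 reserved bits = 13 zero bytes, then SOF
    frame += bytes(13) + bytes([sof])
    frame += fc_frame
    # FCoE trailer: EOF followed by 3 reserved bytes
    frame += bytes([eof]) + bytes(3)
    return frame


def decapsulate_fcoe(frame: bytes) -> bytes:
    """Return the embedded FC frame after checking the EtherType."""
    offset = 12  # skip destination and source MAC addresses
    (ethertype,) = struct.unpack_from("!H", frame, offset)
    if ethertype == VLAN_TPID:  # skip an optional 802.1Q tag
        offset += 4
        (ethertype,) = struct.unpack_from("!H", frame, offset)
    if ethertype != FCOE_ETHERTYPE:
        raise ValueError(f"not an FCoE frame (EtherType 0x{ethertype:04x})")
    start = offset + 2 + 14  # EtherType, then the 14-byte FCoE header
    return frame[start:-4]   # drop EOF and the 3 reserved bytes


# Usage: a hypothetical FC frame with a zeroed 24-byte header and a placeholder CRC
fc = bytes(24) + b"SCSI command payload" + bytes(4)
wire = encapsulate_fcoe("0e:fc:00:01:00:01", "00:11:22:33:44:55", fc, vlan_id=100)
assert decapsulate_fcoe(wire) == fc  # the FC frame comes back unchanged
```

The round trip makes the point in the text concrete: the switch and the storage target see the same Fibre Channel bytes the server built, so no gateway has to map SCSI-over-TCP sessions onto Fibre Channel exchanges the way an iSCSI front end does.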