HP InfiniBand FDR 2-port 545M Converged Networks and Fibre Channel over Ethern - Page 7

Cost structure, Congestion and flow control, Fibre Channel


Page 7 highlights

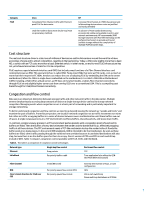

| Category | Cisco | HP |
|---|---|---|
| FCoE | Consolidate Fibre Channel traffic with Ethernet / push FCoE for data center; lead the market in data center (multi-hop) FCoE via proprietary methods | Converge Fibre Channel at c7000 chassis level and in Networking devices where costs are justified and easily defended |

In advance of FCoE standards becoming economically viable and available in end-to-end network environments, HP recommends 3PAR Storage Solutions with Flat SAN technology as the preferred mechanism to provide end-to-end scalable storage without the complexities of multiple FC/FCoE switch hops.

Cost structure

The real driver of cost structure is scale: it takes sales of tens of millions of devices to data centers around the world to achieve economies of scale and to attract competition, which results in the best pricing. Today, a CNA costs slightly more than a basic NIC, and a switch with an FCF costs more than a basic Ethernet switch. In other words, an end-to-end FCoE infrastructure has not yet achieved the economies of scale it needs to be competitive.

FCoE requires a special network interface card (NIC) that includes most functions of a Fibre Channel interface card, commonly known as a host bus adapter (HBA). This special interface is called a converged network adapter (CNA). Today, most CNAs that exist as PCIe cards cost as much as or more than their respective FC HBAs.

Vendors can reduce the cost of adopting FCoE by embedding the CNA on the server motherboard. When the CNA is a single device embedded on the motherboard, it is called a CNA LAN on Motherboard (LOM). Including a CNA LOM on a blade server, as HP does on its latest models, enables Fibre Channel connectivity through FCoE at only slightly higher server cost than running iSCSI over a conventional LOM. This is a compelling cost breakthrough for FCoE/Fibre Channel connectivity.

Congestion and flow control

Data size is an important distinction between storage traffic and other network traffic in the data center.
Multiple servers simultaneously accessing large amounts of data on a single storage device can lead to storage network congestion. Managing congestion events is a basic part of networking and is particularly important for storage networking.

To better understand congestion and flow control, we need to go beyond viewing the network as "speeds and feeds" and examine the pool of buffers. From this perspective, we visualize network congestion not as traffic moving over wires but as traffic occupying buffers in a series of devices between source and destination until those buffers run out of space.

A single runaway process in a TCP environment overflows buffers, which causes packets to be discarded and traffic to slow down. In contrast, a single runaway process in a PFC environment pushes packets until a congestion point is found and its buffers are filled. Then the buffers of every device between the sender and the bottleneck fill up, effectively stopping traffic at all those devices. As soon as these buffers are filled, other traffic passing through the switches from unrelated sources to unrelated destinations also stops, because there is no free buffer space for it to occupy.

The PFC environment needs a TCP-like mechanism to stop the sender before it floods the buffer pools in so many places. In the current DCB standards, QCN is intended to be that mechanism. Cisco's version of PFC uses FCFs in each switch hop instead of QCN.

Table 3 compares the congestion control available with FCoE, iSCSI, and InfiniBand.

Table 3. Comparison of congestion control technologies

| Network type | Single hop flow control | End to end flow control |
|---|---|---|
| Ethernet | Drop packets | TCP |
| InfiniBand | Per priority buffer credit | Tune application (also offers the QCN-like FECN/BECN mechanism) |
| Fibre Channel | Credit (BB Credit) | Severely limit number of hops, or tune configuration |
| DCB | Per priority pause flow control (PFC) | QCN |
| Cisco's latest direction for FCoE over CEE | Per priority pause flow control | FCF in each switch hop; same multi-hop limitations as FC SANs |
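The buffer-pool view of congestion described above can be illustrated with a small simulation. The following Python sketch is a toy model only, not an implementation of PFC, QCN, or TCP: it assumes a single chain of switch buffers feeding one slow destination, and every name and parameter in it (`simulate`, `hops`, `capacity`, `offered`, `link_rate`, `drain`, `ticks`) is hypothetical and chosen for illustration. In the lossy mode a full buffer discards the excess, as in plain Ethernet. In the lossless mode an upstream device holds packets whenever the next buffer is full, which approximates pause-style flow control.

```python
from dataclasses import dataclass


@dataclass
class Result:
    occupancy: list      # packets queued at each hop when the run ends
    dropped: int         # packets discarded somewhere along the path
    held_at_sender: int  # packets the sender was not allowed to inject


def simulate(lossless, hops=4, capacity=10, offered=5,
             link_rate=5, drain=1, ticks=50):
    """Toy model: a runaway sender pushes packets through a chain of
    finite buffers toward a slow destination (all values hypothetical)."""
    queues = [0] * hops
    dropped = 0
    held = 0
    for _ in range(ticks):
        # The destination is the bottleneck: it drains only a few packets.
        queues[-1] -= min(queues[-1], drain)

        # Forward hop by hop, starting next to the bottleneck.
        for i in range(hops - 2, -1, -1):
            want = min(queues[i], link_rate)
            free = capacity - queues[i + 1]
            if lossless:
                sent = min(want, free)          # paused: excess stays queued
            else:
                sent = want                     # lossy: send regardless
                dropped += max(0, want - free)  # overflow is discarded
            queues[i] -= sent
            queues[i + 1] += min(sent, free)

        # The sender offers a fixed load to the first buffer.
        free = capacity - queues[0]
        accepted = min(offered, free)
        if lossless:
            held += offered - accepted
        else:
            dropped += offered - accepted
        queues[0] += accepted
    return Result(queues, dropped, held)


if __name__ == "__main__":
    print("lossy (drop):    ", simulate(lossless=False))
    print("lossless (pause):", simulate(lossless=True))
```

With these assumed parameters, the lossy run fills only the buffer next to the bottleneck and discards packets there, while the lossless run discards nothing but ends with every buffer on the path full. That second outcome is the condition in which unrelated traffic sharing those switches would also stall, and it is why the text calls for an end-to-end mechanism such as QCN to slow the sender.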