Dell PowerEdge External Media System 1434 Improving NFS performance on HPC clu - Page 11



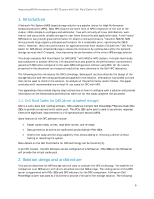

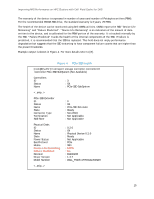

Improving NFS Performance on HPC Clusters with Dell Fluid Cache for DAS

Table 5. I/O cluster details

| I/O cluster configuration | |
| --- | --- |
| CLIENTS | 64 PowerEdge M420 blade servers; 32 blades in each of two PowerEdge M1000e chassis |
| CHASSIS CONFIGURATION | Two PowerEdge M1000e chassis, each with 32 blades; two Mellanox M4001F FDR10 I/O modules per chassis; two PowerConnect M6220 I/O switch modules per chassis |
| INFINIBAND FABRIC (for I/O traffic) | Each PowerEdge M1000e chassis has two Mellanox M4001 FDR10 I/O module switches. Each FDR10 I/O module has four uplinks to a rack Mellanox SX6025 FDR switch, for a total of 16 uplinks. The FDR rack switch has a single FDR link to the NFS server. |
| ETHERNET FABRIC (for cluster deployment and management) | Each PowerEdge M1000e chassis has two PowerConnect M6220 Ethernet switch modules. Each M6220 switch module has one link to a rack PowerConnect 5224 switch. There is one link from the rack PowerConnect switch to an Ethernet interface on the cluster master node. |

| I/O compute node configuration | |
| --- | --- |
| CLIENT | PowerEdge M420 blade server |
| PROCESSORS | Dual Intel(R) Xeon(R) CPU E5-2470 @ 2.30 GHz |
| MEMORY | 48 GB (6 × 8 GB 1600 MT/s RDIMMs) |
| INTERNAL DISK | One 50 GB SATA SSD |
| INTERNAL RAID CONTROLLER | PERC H310 Embedded |
| CLUSTER ADMINISTRATION INTERCONNECT | Broadcom NetXtreme II BCM57810 |
| I/O INTERCONNECT | Mellanox ConnectX-3 FDR10 mezzanine card |

| I/O cluster software and firmware | |
| --- | --- |
| BIOS | 1.3.5 |
| iDRAC | 1.23.23 (Build 1) |
| OPERATING SYSTEM | Red Hat Enterprise Linux (RHEL) 6.2 |
| KERNEL | 2.6.32-220.el6.x86_64 |
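The counts quoted in Table 5 can be cross-checked with a little arithmetic. The sketch below is only an illustration: the constant and function names are assumptions introduced here, not part of the white paper, and the only inputs are the chassis, blade, module, and uplink counts stated in the table.

```python
# Counts taken from Table 5; all names below are illustrative, not Dell's.
CHASSIS = 2                  # PowerEdge M1000e chassis
BLADES_PER_CHASSIS = 32      # PowerEdge M420 blades per chassis
IB_MODULES_PER_CHASSIS = 2   # Mellanox FDR10 I/O modules per chassis
UPLINKS_PER_IB_MODULE = 4    # uplinks from each module to the rack FDR switch
NFS_SERVER_LINKS = 1         # FDR links from the rack switch to the NFS server


def fabric_summary():
    """Derive client and uplink totals from the per-chassis counts."""
    clients = CHASSIS * BLADES_PER_CHASSIS
    uplinks = CHASSIS * IB_MODULES_PER_CHASSIS * UPLINKS_PER_IB_MODULE
    return {
        "clients": clients,
        "uplinks": uplinks,
        "clients_per_uplink": clients / uplinks,
        "clients_per_server_link": clients / NFS_SERVER_LINKS,
    }


if __name__ == "__main__":
    for name, value in fabric_summary().items():
        print(f"{name}: {value}")
```

The derived totals agree with the 64 clients and 16 uplinks stated in the table. The last figure makes explicit that all clients ultimately share the single FDR link to the NFS server.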