HP Cluster Platform Interconnects v2010 Using InfiniBand for a Scalable Comput - Page 3

InfiniBand technology, InfiniBand architecture

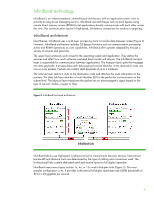

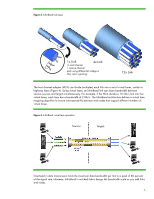

InfiniBand technology

InfiniBand is an industry-standard, channel-based architecture with an application-centric view to provide an easy-to-use messaging service. InfiniBand uses techniques such as stack bypass using remote direct memory access (RDMA) to let applications directly communicate with each other across the wire. This communication results in high-speed, low-latency connections for scale-out computing.

InfiniBand architecture

Like Ethernet, InfiniBand uses a multi-layer processing stack to transfer data between nodes (Figure 2). However, InfiniBand architecture includes OS-bypass functions such as communication processing duties and RDMA operations as core capabilities. InfiniBand offers greater adaptability through a variety of services and protocols.

The upper layer protocols work closest to the operating system and application. They define the services and affect how much software overhead data transfer will require.

The InfiniBand transport layer is responsible for communication between applications. The transport layer splits the messages into data payloads. It encapsulates each data payload and an identifier of the destination node into one or more packets. Packets can contain data payloads of up to 4 kilobytes.

The network layer selects a route to the destination node and attaches the route information to the packets.

The data link layer attaches a local identifier (LID) to the packet for communication at the subnet level.

The physical layer transforms the packet into an electromagnetic signal based on the type of network media: copper or fiber.

Figure 2. InfiniBand functional architecture

InfiniBand Link

InfiniBand fabrics use high-speed, bi-directional serial interconnects between devices. Interconnect bandwidth and distance limits are determined by the type of cabling and connections used. The bi-directional links contain dedicated send and receive lanes for full-duplex operation.
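As an aside on the layered architecture described earlier, the following Python sketch illustrates how a message might pass down the stack: the transport layer splits it into payloads of at most 4 kilobytes and tags each with the destination node, the network layer attaches route information, and the data link layer attaches a LID. The `Packet` class, the function names, and the example values (`"node-b"`, `"route-to-node-b"`, `0x12`) are hypothetical and chosen only for illustration. They do not represent the InfiniBand wire format or any real API.

```python
from dataclasses import dataclass
from typing import List, Optional

MAX_PAYLOAD = 4096  # bytes; the text gives 4 kilobytes as the payload limit


@dataclass
class Packet:
    """Hypothetical packet record; not the real InfiniBand packet layout."""
    dest_node: str                # destination identifier added by the transport layer
    seq: int                      # illustrative sequence number (an assumption)
    payload: bytes
    route: Optional[str] = None   # filled in by the network layer
    lid: Optional[int] = None     # filled in by the data link layer


def transport_segment(message: bytes, dest_node: str,
                      max_payload: int = MAX_PAYLOAD) -> List[Packet]:
    """Split a message into payloads and wrap each one with the destination."""
    return [
        Packet(dest_node, seq, message[offset:offset + max_payload])
        for seq, offset in enumerate(range(0, len(message), max_payload))
    ]


def network_attach_route(packets: List[Packet], route: str) -> None:
    """Attach (hypothetical) route information to every packet."""
    for p in packets:
        p.route = route


def link_attach_lid(packets: List[Packet], lid: int) -> None:
    """Attach a local identifier (LID) for delivery within the subnet."""
    for p in packets:
        p.lid = lid


if __name__ == "__main__":
    packets = transport_segment(b"x" * 10000, "node-b")
    network_attach_route(packets, "route-to-node-b")
    link_attach_lid(packets, 0x12)
    for p in packets:
        print(p.seq, len(p.payload), p.dest_node, p.route, hex(p.lid))
```

In this sketch a 10,000-byte message yields three packets carrying 4096, 4096, and 1808 bytes.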
InfiniBand interconnect types include 1x, 4x, or 12x wide full-duplex links (Figure 3). The most popular configuration is 4x. At quad data rate (QDR), a 4x link provides a theoretical bandwidth of 40 gigabits per second in each direction, or 80 (2 x 40) gigabits per second full duplex.
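The bandwidth arithmetic can be reproduced with a short Python sketch. It assumes that a link's one-way rate scales linearly with its width, so the 40 gigabits per second quoted for a 4x QDR link implies 10 gigabits per second per lane. The constant and function names are illustrative, and the figures are theoretical signaling rates rather than measured throughput.

```python
# Assumption: 40 Gb/s over a 4x link implies 10 Gb/s per lane at QDR.
QDR_LANE_GBPS = 10


def link_bandwidth_gbps(width, lane_gbps=QDR_LANE_GBPS):
    """Return (one-way, full-duplex) theoretical bandwidth in Gb/s."""
    one_way = width * lane_gbps
    return one_way, 2 * one_way


if __name__ == "__main__":
    for width in (1, 4, 12):
        one_way, full_duplex = link_bandwidth_gbps(width)
        print(f"{width}x: {one_way} Gb/s per direction, "
              f"{full_duplex} Gb/s full duplex")
```

For the 4x case this returns 40 and 80, matching the figures in the text.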