HP Cluster Platform Interconnects v2010 Using InfiniBand for a Scalable Comput - Page 9

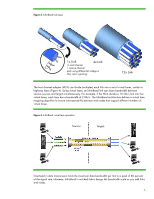

To meet extreme density goals, the half-height HP BL2x220c server blade includes two server nodes. Each node supports two quad-core Intel® Xeon® 5400-series processors and has a slot for a mezzanine board. Because a c7000 enclosure holds 16 half-height blades, that equals up to 32 nodes (256 cores) per c7000 enclosure. Each c7000 enclosure also contains two HP 4x QDR InfiniBand Switch Blades. Figure 8 shows how using the dual-node BL2x220c blade lets you deploy 576 nodes in half the rack space that single-node blades would require.

Figure 8. HP BladeSystem c-Class 576-node cluster configuration using BL2x220c blades

[Diagram: HP c7000 Enclosures #1, #2, … #18, each holding 16 HP BL2x220c server blades with 4x QDR HCAs and two HP QDR IB Switch Blades, connected to 36-port QDR IB Switches #1, #2, … #16.]

| Total nodes | Racks required for servers | Interconnect |
|---|---|---|
| 576 (2 per blade) | Four 42U, one 36U (assumes four c7000 enclosures per rack) | 1:1 full bandwidth (non-blocking), 3 switch hops maximum, fabric redundancy |
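The sizing arithmetic behind Figure 8 can be checked with a short Python sketch. It models the fabric as a two-tier fat tree, treating the switch blades as leaf switches and the 36-port switches as the spine. This is a minimal sketch, not HP's sizing method. The class and method names are hypothetical, and it relies on two assumptions that this page does not state: each switch blade has 16 node-facing and 16 uplink ports, and each switch blade runs one uplink to every spine switch.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class BladeClusterConfig:
    """Hypothetical sizing model of the Figure 8 fabric as a two-tier fat tree.

    Defaults follow the BL2x220c example; names are illustrative only.
    """
    enclosures: int = 18
    blades_per_enclosure: int = 16          # half-height bays per c7000
    nodes_per_blade: int = 2                # BL2x220c: two server nodes per blade
    sockets_per_node: int = 2               # two quad-core Xeon 5400-series CPUs
    cores_per_socket: int = 4
    switch_blades_per_enclosure: int = 2    # leaf tier: HP 4x QDR IB Switch Blades
    # ASSUMPTION: 16 node-facing and 16 uplink ports per switch blade.
    leaf_down_ports: int = 16
    leaf_up_ports: int = 16
    spine_switches: int = 16                # 36-port QDR IB switches #1 to #16
    spine_ports: int = 36
    tiers: int = 2                          # leaf + spine
    enclosures_per_rack: int = 4

    def nodes_per_enclosure(self) -> int:
        return self.blades_per_enclosure * self.nodes_per_blade

    def cores_per_enclosure(self) -> int:
        return self.nodes_per_enclosure() * self.sockets_per_node * self.cores_per_socket

    def total_nodes(self) -> int:
        return self.enclosures * self.nodes_per_enclosure()

    def leaf_switches(self) -> int:
        return self.enclosures * self.switch_blades_per_enclosure

    def oversubscription(self) -> float:
        # Node-facing vs. spine-facing ports per leaf; 1.0 means 1:1 (non-blocking).
        return self.leaf_down_ports / self.leaf_up_ports

    def max_switch_hops(self) -> int:
        # Worst case climbs to the top tier and back down: leaf -> spine -> leaf.
        return 2 * self.tiers - 1

    def enclosure_racks(self) -> int:
        return math.ceil(self.enclosures / self.enclosures_per_rack)

    def check_wiring(self) -> None:
        """Raise ValueError if the port counts cannot be cabled as assumed."""
        if self.nodes_per_enclosure() > self.switch_blades_per_enclosure * self.leaf_down_ports:
            raise ValueError("not enough node-facing switch blade ports")
        # ASSUMPTION: each switch blade runs exactly one uplink to every spine switch.
        if self.leaf_up_ports != self.spine_switches:
            raise ValueError("uplinks per switch blade must equal spine switch count")
        if self.leaf_switches() > self.spine_ports:
            raise ValueError("more switch blades than ports on each spine switch")


if __name__ == "__main__":
    cfg = BladeClusterConfig()
    cfg.check_wiring()
    print(cfg.nodes_per_enclosure(), cfg.cores_per_enclosure())  # 32 256
    print(cfg.total_nodes(), cfg.leaf_switches())                # 576 36
    print(cfg.oversubscription(), cfg.max_switch_hops())         # 1.0 3
    print(cfg.enclosure_racks())                                 # 5
```

With these defaults the port counts match exactly: 36 switch blades × 16 uplinks = 16 spine switches × 36 ports = 576 links. A 19th enclosure would therefore overflow the spine ports, and `check_wiring` would raise `ValueError`.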