Dell PowerEdge C4140 Deep Learning Performance Comparison - Scale-up vs. Scale - Page 22


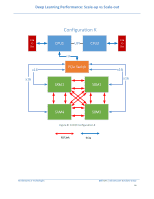

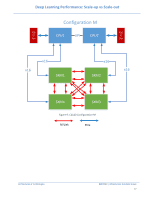

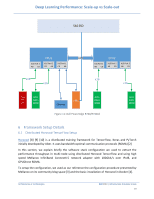

Deep Learning Performance: Scale-up vs Scale-out

Figure 14 shows how GPUDirect RDMA allows GPU memory to be accessed directly, instead of copying the data n times across the system components. This feature is reflected directly in the throughput performance of the server.

Figure 14: Nvidia GPU Direct RDMA Connection. Source: https://www.sc-asia.org

6.2 Evaluation Platform Setup

Table 4 shows the software stack configuration used to build the environment to run the tests.

| Software Stack | PowerEdge Servers | Non-Dell EMC Servers |
| --- | --- | --- |
| OS | Ubuntu 16.04.4 LTS | Ubuntu 16.04.3 LTS |
| Kernel | GNU/Linux 4.4.0-128-generic x86_64 | GNU/Linux 4.4.0-130-generic x86_64 |
| nvidia driver | 396.26 for all servers; 390.46 for R740-P40 | 384.145 |
| Open MPI | 3.0.1 | 3.0.0 |
| CUDA | 9.1.85 | 9.0.176 |
| cuDNN | 7.1.3.16 | 7.1.4 |
| NCCL | 2.2.15 | 2.2.13 |
| Docker Container | NVidia TensorFlow Docker | Nvidia TensorFlow Docker |
| Container Image - Single Node | TensorFlow/tensorflow:nightly-gpu-py3 | nvcr.io/nvidia/tensorflow:18.06-py3 |
| Container Image - Multi Node | Horovod : latest | n/a |
| Benchmark scripts | tf_cnn_benchmarks | tf_cnn_benchmarks |
| Test Date - V1 | April-June 2018 | July 2018 |
| Test Date - V2 | Jan 2019 | NA |

Table 4: OS & Driver Configurations

Architectures & Technologies Dell EMC | Infrastructure Solutions Group 21
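Because the two test platforms in Table 4 were not built from identical software, it can help to see at a glance which components differ and which side runs the newer release. The following is a minimal sketch, not part of the original study: it transcribes a subset of Table 4 into Python dictionaries and compares the dotted version strings. The names `POWEREDGE`, `NON_DELL`, `parse_version`, `differing_components`, and `newer_stack` are hypothetical, chosen only for illustration.

```python
# Subset of Table 4, transcribed by hand. The dictionary layout is an
# assumption made for this sketch; only the values come from the table.
POWEREDGE = {
    "Open MPI": "3.0.1",
    "CUDA": "9.1.85",
    "cuDNN": "7.1.3.16",
    "NCCL": "2.2.15",
    "Benchmark scripts": "tf_cnn_benchmarks",
}
NON_DELL = {
    "Open MPI": "3.0.0",
    "CUDA": "9.0.176",
    "cuDNN": "7.1.4",
    "NCCL": "2.2.13",
    "Benchmark scripts": "tf_cnn_benchmarks",
}


def parse_version(text):
    """Turn a dotted version string such as '9.1.85' into a tuple of ints."""
    return tuple(int(part) for part in text.split("."))


def differing_components(a, b):
    """Return the components present in both stacks whose values differ."""
    return [name for name in a if name in b and a[name] != b[name]]


def newer_stack(component):
    """Report which platform lists the higher version for a component."""
    dell = parse_version(POWEREDGE[component])
    other = parse_version(NON_DELL[component])
    if dell == other:
        return "same"
    return "PowerEdge" if dell > other else "Non-Dell EMC"


if __name__ == "__main__":
    for name in differing_components(POWEREDGE, NON_DELL):
        print(f"{name}: newer on {newer_stack(name)}")
```

Tuple comparison handles version strings of different lengths (for example `7.1.3.16` against `7.1.4`), which a plain string comparison would not do reliably. Only rows with purely numeric versions should be passed to `newer_stack`; free-text rows such as the container image names are left out of the numeric comparison on purpose.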