Compaq ProLiant 1000 Compaq Backup and Recovery for Microsoft SQL Server 6.X - Page 44


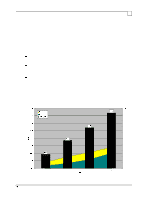

databases to two different drives the combined throughput is 74 GB/hr, about a 4x increase. Another way to look at this is that dumping four databases concurrently takes only about one-fourth as long as dumping them sequentially (back-to-back). Furthermore, with enough concurrent operations to DLT tape, the overall throughput (74 GB/hr) is higher than any rate we have seen before. Hardware utilization at this point is around 26% CPU and 36% PCI, so bandwidth remains in the system for additional jobs.

Since concurrent dumps involve multiple databases, each of which can reside on the same or different disk controllers, we are no longer bound by the read rate of a single controller and so achieve a very high overall throughput. Furthermore, since each dump operation is separate and has its own dedicated I/O devices (disk array and tape drive), the operations are truly parallel and display near-linear scalability (increase in performance).

Finally, note that concurrent dumps can also be done as striped dumps, so that SQL Server dumps multiple databases at once, with each database dump writing to multiple tape drives. Several different combinations of drives to databases are possible for such "concurrent, striped dumps". For example, in the above system we replaced the four 35/70-GB DLT drives with twelve 15/30-GB DLT drives and configured backup 'stripe sets' of three tape drives for each database. When all four databases were dumped concurrently, an overall throughput exceeding 50 GB/hr was observed.

The analysis in this section uses third-party products to supplement the SQL Server database backup functionality: ARCserve 6.0 for Windows NT, the ARCserve Backup Agent for SQL Server option, and the ARCserve RAID option.
Details about the features and operational characteristics of these products were covered in an earlier section: Online Backup Considerations with ARCserve for Windows NT.

All backup tests discussed in this section were performed by sending data from an online SQL Server database to 15/30-GB DLT tape drives on the same (local) server. As with previous tests, no user transaction load was occurring on the database being backed up, or on any other database on the system. The ARCserve applications act as a 'go-between' from SQL Server to the tape subsystem.

For these operations, which use ARCserve to back up databases to multiple tape drives, the following considerations were taken into account:

- The database was contained on a RAID-5 disk array, using a single Smart-2/P controller (both SCSI ports), which resided on the primary PCI bus of the ProLiant 5000.
- All of the DLT drives resided in Compaq DLT Tape Array cabinets (4 drives per cabinet). Each cabinet was cabled to 2 controllers (the embedded Fast-Wide SCSI-2/P controller or additional Wide-Ultra SCSI-2/P cards), so that each controller interfaced to 2 drives. Up to 8 tape drives and 4 controllers were used in the testing.
- All tape drives were grouped into a single 'array' using the ARCserve RAID Option. Tests were conducted using from 1 to 8 tape drives in a RAID-0 array, and then with 3 to 8 tape drives in a RAID-5 (fault-tolerant) array.
- Compression was enabled on the tape drives, providing as much as a 15% increase in throughput vs. non-compressed. A database whose data compresses better or worse could yield throughput higher or lower than that displayed below, although such differences become less apparent as more DLT drives are striped together to form a single high-speed tape array.

Using the above guidelines, performance was measured while monitoring processor usage and PCI bus usage (average of the 2 PCI buses).
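
To make the set of tape-array configurations described above easier to picture, here is a minimal Python sketch that enumerates them. It is an illustration only, not part of the original test procedure: the function and variable names are hypothetical, and it assumes that controllers are populated two drives at a time and that a RAID-5 tape array gives up one drive's worth of capacity to parity, as in conventional RAID-5. It computes no throughput figures.

```python
# Illustrative sketch only; all names here are hypothetical, not ARCserve settings.
DRIVES_PER_CONTROLLER = 2  # from the text: each controller interfaced to 2 drives
MAX_DRIVES = 8             # from the text: up to 8 tape drives were used

# Smallest and largest array size tested at each RAID level, per the text.
TESTED_RANGES = {"RAID-0": (1, MAX_DRIVES), "RAID-5": (3, MAX_DRIVES)}


def controllers_needed(drives):
    """Assumption: controllers are filled two drives at a time."""
    return -(-drives // DRIVES_PER_CONTROLLER)  # ceiling division


def data_drives(level, drives):
    """Assumption: RAID-5 spends one drive's worth of capacity on parity."""
    return drives - 1 if level == "RAID-5" else drives


def build_test_matrix():
    """List every (RAID level, drive count) combination named in the text."""
    rows = []
    for level, (low, high) in TESTED_RANGES.items():
        for drives in range(low, high + 1):
            rows.append({
                "level": level,
                "drives": drives,
                "controllers": controllers_needed(drives),
                "data_drives": data_drives(level, drives),
            })
    return rows


if __name__ == "__main__":
    for row in build_test_matrix():
        print(row["level"], row["drives"], "drives,",
              row["controllers"], "controllers,",
              row["data_drives"], "data-bearing")
```

Under these assumptions the largest configuration, 8 drives, occupies all 4 controllers, which matches the maximum stated in the text.
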
The throughput results for all tests, along with the hardware usage percentages for the RAID-0 tests, are displayed in the following chart: