Compaq ProLiant 1000 Compaq Backup and Recovery for Microsoft SQL Server 6.X - Page 74

Compaq Backup and Recovery for Microsoft SQL Server 6.x, syslogs

|

View all Compaq ProLiant 1000 manuals

Add to My Manuals

Save this manual to your list of manuals |

Page 74 highlights

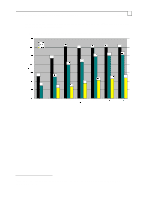

Compaq Backup and Recovery for Microsoft SQL Server 6.x Page 74 The page reinitialization phase of the load (chart 16) is generally faster with all types of arrays than the initial phase. Although both processes employ 16 KB writes to the data array, the reinitialization is a purely sequential operation (which translates to less head seek times on the disk drives), and does not need to fetch data from the tape drives. As with most sequential operations, performance does not continue to increase beyond a certain number of spindles in the array. Using charts 15 and 16, we can estimate the total amount of time that is needed to load a database from a database dump. Since a full database dump image consists of both the database and its transaction log, we must account for the time consumed by 4 separate process, listed in order of operation as follows: 1. The restore data pages in the database, 2. The restore of pages in the syslogs table (the transaction log), 3. Reinitializaiton of unused pages in the database, and 4. Reinitialization of unused log pages. The charts above apply to both data and log restores (meaning log restoration done as part of the LOAD DATABASE command). However, since the array used to house the transaction log may differ from that used to hold the database, it will be necessary to use one of three possible methods: If both the data and log parts of the load will be limited by the throughput of the system (tape drives, network link, etc.), then the size of the transaction log can be added to the size of the database, and the total size divided by the throughput of the system to find the restore times. Only the reinitialization rates are considered separately: [(db_size + log_size) / system_throughput] + [db_free_space / db_reinit_rate] + [log_free_space / log_reinit_rate] = load_time The exception here would be when the transaction log space yields compression significantly different from the data space. If the data load will be limited by the throughput of the system, and the log load will be limited by the write-throughput of the log volume, then the rate at which the log can be restored must be considered separately. The reinitialization rates are also considered separately. [db_size / system_throughput] + [log_size / log_volume_throughput] + [db_free_space / db_reinit_rate] + [log_free_space / log_reinit_rate] = load_time If both the data and log parts of the load will be limited by the write-throughputs of their respective volumes, then the rate at which each can be restored must be considered independantly. The reinitialization rates are also considered separately. [db_size / db_volume_throughput] + [log_size / log_volume_throughput] + [db_free_space / db_reinit_rate] + [log_free_space / log_reinit_rate] = load_time The system throughput (in Gb/hr) for various configurations can be obtained from the charts in previous sections, as the actual performance of the storage interface (tape drives or network link) is generally the same while storing data as when retrieving it (the exception is disk storage) . Data or Log volume throughput limitations (in GB/hr) are shown in Chart-15. Reinitialization rates are shown in Chart-16. The size of your data and log spaces as well as the unused amounts in each (all in GB) should be obtained using one of the methods discussed in 'Online Backup Considerations with SQL Server Dump'. Time (in hrs) can then be estimated using the above formulae. Note that if the database and transaction log reside on the same disk volume, then these formulae can be simplified by considering the log as part of the database. Example: A full database backup was performed on a database with 27.3 GB of used data space, and 850 MB of used transaction log space, using SQL Server dump92. This backup set now needs to be 92 Using SQL Enterprise Manager it is possible to determine the total size of the backup image on tape, but it is not possible to determine how much of that space was for data and how much for the log. Thus, it is advisable that the user label his or her tapes with this information.