HP Cluster Platform Express v2010 Workgroup System and Cluster Platform Express - Page 21

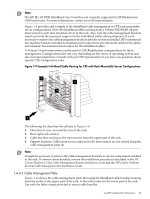

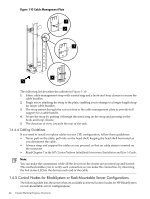

Figure 1-6 Example InfiniBand Cable Routing for CPE BladeSystem with c7000 Enclosure Configurations

The following list describes the callouts in Figure 1-6:

1. c-Class enclosure at the bottom of the cabinet.
2. Second c-Class enclosure in the cabinet (a 42U rack holds a maximum of three enclosures).
3. c-Class cable management bracket.
4. 24-node cable management bracket.
5. Direction of view, facing the rear of the cabinet.
6. InfiniBand cable routed through a 24-node cable management bracket. The cable continues to the cable management plate and then on to the 4X DDR InfiniBand modules in the c-Class enclosures.
7. Cable management plate.

Figure 1-7 shows the optional HP 4X DDR InfiniBand module being installed in a c-Class enclosure (a c7000 is shown). CPE configurations ship with all modules already installed, so this figure is provided for reference only. The optional 4X DDR InfiniBand module is also available for CP Workgroup System configurations.

The HP 4X DDR InfiniBand Mezzanine HCA (not shown) is a single-port 4X DDR InfiniBand PCI-Express mezzanine adapter for HP c-Class server blades. The HCA can be plugged into the PCI-Express mezzanine connectors on HP c-Class server blades. For best performance, however, plug it into the blade's x8 PCI-Express connector.

A subnet manager is required to establish an InfiniBand fabric. For HPC configurations, Cluster Platform requires an internally managed Voltaire Grid Switch, which provides the subnet management service. You can also use OpenSM from the OpenFabrics Enterprise Distribution (OFED) to establish an InfiniBand fabric.
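A port's link can come up physically without the fabric being usable, because a subnet manager must first assign LIDs and activate the port. The following is a minimal sketch of checking this on a node. It assumes you capture the text printed by `ibstat` (from the infiniband-diags tools distributed with OFED) and that the output follows the usual layout: a `Port N:` line followed by indented `State:` and `SM lid:` lines. The `SAMPLE` text and the helper names `port_states` and `fabric_ready` are hypothetical and for illustration only; they are not captured from a CPE system.

```python
# Sketch: check from ibstat-style text whether a subnet manager has activated each port.
# ASSUMPTION: input follows the common `ibstat` layout ("Port N:" then indented
# "State:" and "SM lid:" lines). SAMPLE is illustrative, not real output from a CPE node.
import re

SAMPLE = """\
CA 'mlx4_0'
\tNumber of ports: 1
\tPort 1:
\t\tState: Initializing
\t\tPhysical state: LinkUp
\t\tBase lid: 0
\t\tSM lid: 0
"""


def port_states(ibstat_text: str) -> dict[int, tuple[str, int]]:
    """Hypothetical helper: map port number -> (logical state, SM LID)."""
    ports: dict[int, tuple[str, int]] = {}
    current = None  # port number of the section being parsed
    state = ""
    for raw in ibstat_text.splitlines():
        line = raw.strip()
        if m := re.match(r"Port (\d+):$", line):
            current = int(m.group(1))
            state = ""
        elif current is not None and line.startswith("State:"):
            # "Physical state:" does not match here, only the logical port state.
            state = line.split(":", 1)[1].strip()
        elif current is not None and line.startswith("SM lid:"):
            ports[current] = (state, int(line.split(":", 1)[1]))
    return ports


def fabric_ready(ibstat_text: str) -> bool:
    """True only if every parsed port is Active and reports a nonzero SM LID."""
    ports = port_states(ibstat_text)
    return bool(ports) and all(s == "Active" and sm != 0 for s, sm in ports.values())


if __name__ == "__main__":
    # A port stuck in "Initializing" with SM lid 0 suggests no subnet manager is running.
    print(port_states(SAMPLE), fabric_ready(SAMPLE))
```

A port that remains in `Initializing` typically means that neither the switch-embedded subnet manager nor OpenSM has configured the fabric yet.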
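Simple arithmetic shows why the x8 connector matters for the 4X DDR mezzanine HCA. The sketch below assumes the mezzanine connector runs at PCI-Express 1.x rates (2.5 GT/s per lane); that is an assumption and is not stated in this manual. A 4X DDR InfiniBand port signals at 4 lanes × 5 Gb/s, and both links use 8b/10b encoding. The function names are hypothetical, and the figures are theoretical peaks that ignore protocol overhead.

```python
# Rough per-direction data-rate comparison: 4X DDR InfiniBand vs. PCI-Express 1.x.
# ASSUMPTION: the blade's mezzanine connectors run at PCI-Express 1.x rates
# (2.5 GT/s per lane). Values are theoretical peaks, not measured throughput.

ENCODING_EFFICIENCY = 8 / 10  # 8b/10b encoding: 8 data bits per 10 line bits


def ib_4x_ddr_data_gbps() -> float:
    """Data rate of a 4X DDR InfiniBand port in Gb/s (4 lanes x 5 Gb/s signaling)."""
    lanes, signaling_gbps_per_lane = 4, 5.0
    return lanes * signaling_gbps_per_lane * ENCODING_EFFICIENCY


def pcie1_data_gbps(lanes: int) -> float:
    """Per-direction data rate of a PCI-Express 1.x link in Gb/s (2.5 GT/s per lane)."""
    return lanes * 2.5 * ENCODING_EFFICIENCY


if __name__ == "__main__":
    ib = ib_4x_ddr_data_gbps()
    for width in (4, 8):
        pcie = pcie1_data_gbps(width)
        verdict = "keeps up with" if pcie >= ib else "bottlenecks"
        print(f"x{width}: {pcie:.0f} Gb/s vs 4X DDR {ib:.0f} Gb/s -> {verdict} the HCA")
```

Under these assumptions, an x4 link carries about 8 Gb/s per direction. That is half of the roughly 16 Gb/s a 4X DDR port can move, while an x8 link matches it.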