Intel SE7525GP2 Product Specification - Page 41

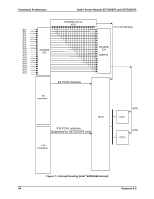

Intel® Server Boards SE7320SP2 and SE7525GP2 Functional Architecture

Note that any given read request will only be retried a single time on behalf of this error detection mechanism. If the uncorrectable error is repeated it will be logged and escalated as directed by device configuration. In the memory mirror mode, the retry on an uncorrectable error will be issued to the mirror copy of the target data, rather than back to the devices responsible for the initial error detection. This has the added benefit of making uncorrectable errors in DRAM fully correctable unless the same location in both primary and mirror happens to be corrupt (statistically very unlikely). This RASUM feature may be enabled and disabled via configuration.

3.5.5.4 Integrated Memory Initialization Engine

The Intel® E7320 and Intel E7525 MCHs provide hardware managed ECC auto-initialization of all populated DRAM space under software control. Once internal configuration has been updated to reflect the types and sizes of populated DIMM devices, the MCH will traverse the populated address space initializing all locations with good ECC. This not only speeds up the mandatory memory initialization step, but also frees the processor to pursue other machine initialization and configuration tasks.

Additional features have been added to the initialization engine to support high speed population and verification of a programmable memory range with one of four known data patterns (0/F, A/5, 3/C, and 6/9). This function facilitates a limited, very high speed memory test, and provides a BIOS-accessible memory zeroing capability for use by the operating system.

3.5.5.5 DIMM Sparing Function

To provide a more fault tolerant system, the Intel® E7320 MCH and Intel E7525 MCH include specialized hardware to support fail-over to a spare DIMM device in the event that a primary DIMM in use exceeds a specified threshold of runtime errors. One of the DIMMs installed per channel will not be used, but kept in reserve.
In the event of significant failures in a particular DIMM, it and its corresponding partner in the other channel (if applicable) will, over time, have their data copied over to the spare DIMM(s) held in reserve. When all the data has been copied, the reserve DIMM(s) will be put into service and the failing DIMM will be removed from service. Only one sparing cycle is supported. If this feature is not enabled, then all DIMMs will be visible in normal address space.

Note: The DIMM Sparing feature requires that the spare DIMM be at least the size of the largest primary DIMM in use.

Hardware additions for this feature include a tracking register per DIMM to maintain a history of error occurrence, and a programmable register to hold the fail-over error threshold level. The operational model is straightforward: set the fail-over threshold register to a non-zero value to enable the feature, and if the count of errors on any DIMM exceeds that value, fail-over will commence. The tracking registers themselves are implemented as "leaky buckets," such that they do not contain an absolute cumulative count of all errors since power-on; rather, they contain an aggregate count of the number of errors received over a running time period. The "drip rate" of the bucket is selectable by software, so it is possible to set the threshold to a value that will never be reached by a "healthy" memory subsystem experiencing the rate of errors expected for the size and type of memory devices in use.

The fail-over mechanism is slightly more complex. Once fail-over has been initiated, the MCH must execute every write twice: once to the primary DIMM, and once to the spare. (This

Revision 4.0, page 29
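
The leaky-bucket error tracking in the DIMM sparing description can be illustrated with a small software model. This is only a sketch under stated assumptions, not the MCH's register interface: the names `LeakyBucket`, `drip_interval`, and `simulate` are hypothetical, time is measured in abstract ticks, and the drip is modeled as one count removed per `drip_interval` ticks. The strict "exceeds" comparison and the rule that a zero threshold disables the feature follow the text.

```python
from dataclasses import dataclass


@dataclass
class LeakyBucket:
    """Hypothetical software model of one per-DIMM error tracking register."""
    threshold: int       # fail-over threshold; 0 leaves the feature disabled
    drip_interval: int   # assumed drip rate: ticks per one-count decrement
    count: int = 0
    _ticks_since_drip: int = 0

    def tick(self, ticks: int = 1) -> None:
        """Advance time, leaking one count per elapsed drip interval."""
        self._ticks_since_drip += ticks
        drips, self._ticks_since_drip = divmod(
            self._ticks_since_drip, self.drip_interval
        )
        self.count = max(0, self.count - drips)

    def record_error(self) -> bool:
        """Count one error; return True when fail-over should commence."""
        self.count += 1
        return self.threshold > 0 and self.count > self.threshold


def simulate(error_ticks, threshold, drip_interval, duration):
    """Return the tick at which fail-over triggers, or None if it never does.

    error_ticks holds distinct tick numbers, one error per listed tick.
    """
    bucket = LeakyBucket(threshold, drip_interval)
    errors = set(error_ticks)
    for t in range(duration):
        bucket.tick()
        if t in errors and bucket.record_error():
            return t
    return None


if __name__ == "__main__":
    # Sparse errors drain faster than they accumulate: no fail-over.
    print(simulate(range(0, 1000, 100), threshold=3, drip_interval=50, duration=1000))
    # A burst of errors on consecutive ticks exceeds the threshold.
    print(simulate(range(5), threshold=3, drip_interval=50, duration=10))
```

In this model a "healthy" DIMM with occasional errors never trips the threshold because the bucket drains between errors, while a burst of errors on consecutive ticks does. That is the behavior the selectable drip rate is meant to allow.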