Dell PowerConnect W Clearpass 100 Software 3.7 Deployment Guide - Page 358


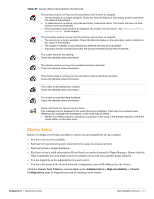

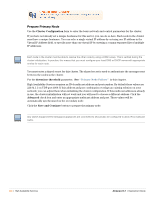

The maintenance commands available on this page depend on the current state of the cluster and on which node you are logged in to. Some maintenance commands are only available on the secondary node. Other commands may change the active state of the cluster. For this reason, it is recommended that you perform cluster maintenance only after logging in to a specific node in the cluster using its IP address.

Recovering From a Failure

From a cluster maintenance perspective, there are two kinds of failure:

- A temporary outage is an event or condition that causes the cluster to fail over to the secondary node. Clearing the condition allows the cluster's primary node to resume operations in essentially the same state as before the outage.
- A hardware failure is a fault that can only be corrected by rebuilding or replacing one of the nodes of the cluster.

The table below lists some system failure modes and the corresponding cluster maintenance that is required.

Table 28 Failure Modes

| Failure Mode | Maintenance |
|---|---|
| Software failure - system crash, reboot or hardware reset | Temporary outage |
| Power failure | Temporary outage |
| Network failure - cables or switching equipment | Temporary outage |
| Network failure - appliance network interface | Hardware failure |
| Hardware failure - other internal appliance hardware | Hardware failure |
| Data loss or corruption | Hardware failure |

Recovering From a Temporary Outage

Use this procedure to repair the cluster and return it to a normal operating state:

1. This procedure assumes that the primary node has experienced a temporary outage and that the cluster has failed over to the secondary node.
2. Ensure that the primary node and the secondary node are both online.
3. Log in to the secondary node. (Due to failover, this node will be assigned the cluster's virtual IP address.)
4. Click Cluster Maintenance, and then click the Recover Cluster command link.
5. A progress meter is displayed while the cluster is recovered.
The cluster's virtual IP address will be temporarily unavailable while the recovery takes place.
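
The failure-mode table and the preconditions of the recovery procedure above can be sketched as a small pre-check script. This is only an illustration, not part of the product: the key names in `FAILURE_MODES`, the helper functions, and the use of a TCP connection on port 443 as a stand-in for "the node is online" are all assumptions made for this example. The recovery itself is still performed through the Cluster Maintenance page as described in the steps.

```python
import socket
from enum import Enum


class Maintenance(Enum):
    TEMPORARY_OUTAGE = "Temporary outage"
    HARDWARE_FAILURE = "Hardware failure"


# Table 28 transcribed as a lookup. The keys are hypothetical shorthand
# chosen for this sketch; they are not identifiers used by the product.
FAILURE_MODES = {
    "software_failure": Maintenance.TEMPORARY_OUTAGE,
    "power_failure": Maintenance.TEMPORARY_OUTAGE,
    "network_cables_or_switching": Maintenance.TEMPORARY_OUTAGE,
    "network_appliance_interface": Maintenance.HARDWARE_FAILURE,
    "internal_hardware": Maintenance.HARDWARE_FAILURE,
    "data_loss_or_corruption": Maintenance.HARDWARE_FAILURE,
}


def required_maintenance(mode: str) -> Maintenance:
    """Return the maintenance category that Table 28 lists for a failure mode."""
    return FAILURE_MODES[mode]


def node_is_online(host: str, port: int = 443, timeout: float = 3.0) -> bool:
    """Treat a node as online if a TCP connection to host:port succeeds.

    Assumption: reachability of the chosen port is an adequate proxy for
    "online"; the guide does not define how to verify this.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def ready_to_recover(mode: str, primary: str, secondary: str, port: int = 443) -> bool:
    """Check the preconditions of the temporary-outage procedure.

    The failure must be classified as a temporary outage, and both the
    primary and the secondary node must be reachable.
    """
    return (
        required_maintenance(mode) is Maintenance.TEMPORARY_OUTAGE
        and node_is_online(primary, port)
        and node_is_online(secondary, port)
    )
```

For example, `ready_to_recover("power_failure", "192.0.2.10", "192.0.2.11")` would report whether the Recover Cluster command is the appropriate next step; the two addresses are placeholders from a documentation range and stand for the individual node IP addresses, not the cluster's virtual IP address.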