HP MSA 1040 SMU Reference Guide (762784-001, March 2014), Page 121

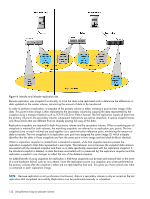

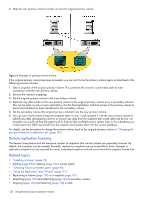

6 Using Remote Snap to replicate volumes

About the Remote Snap replication feature

Remote Snap is a licensed feature for disaster recovery. It performs asynchronous (batch) replication of block-level data from a volume on a local storage system to a volume that can be on the same system or on a second, independent system. The second system can be located at the same site as the first system or at a different site.

A typical replication configuration involves these physical and logical components:

• A host connected to a local storage system, which is networked via FC or iSCSI ports to a remote storage system as described in the installation documentation.
• Remote system. A management object on the local system that enables the MCs (management controllers) in the local system and in the remote system to communicate and exchange data.
• Replication set. Associated master volumes that are enabled for replication and that typically reside in two physically or geographically separate storage systems. These volumes are also called replication volumes.
• Primary volume. The volume that is the source of data in a replication set and that can be mapped to hosts. For disaster recovery purposes, if the primary volume goes offline, a secondary volume can be designated as the primary volume. The primary volume exists in a primary vdisk in the primary system.
• Secondary volume. The volume that is the destination for data in a replication set and that is not accessible to hosts. For disaster recovery purposes, if the primary volume goes offline, a secondary volume can be designated as the primary volume. The secondary volume exists in a secondary vdisk in a secondary system.
• Replication snapshot. A special type of snapshot that preserves the state of data of a replication set's primary volume as it existed when the snapshot was created. For a primary volume, the replication process creates a replication snapshot on the primary system and, once replication of primary-volume data to the secondary volume is complete, on the secondary system. Replication snapshots are unmappable and do not count toward a license limit, although they do count toward the system's maximum number of volumes. A replication snapshot can be exported to a regular, licensed snapshot.
• Replication image. A conceptual term for replication snapshots that have the same image ID in the primary and secondary systems. These synchronized snapshots contain identical data and can be used for disaster recovery.

Replication process overview

In simplified terms, the remote-replication process can be configured to provide either a single point-in-time replication of volume data or a periodic delta-update replication of volume data.

The periodic-update process has multiple steps. At each step, matching snapshots are created: first, in the primary system, a replication snapshot is created of the primary volume's current data; next, this snapshot is used to copy new (delta) data from the primary volume to the secondary volume; finally, in the secondary system, a matching snapshot is created of the updated secondary volume. Each pair of matching snapshots establishes a replication sync point, and subsequent replications continue from these sync points.

The following figure illustrates three replication sets in use by two hosts:

• The host in New York is mapped to and updates the Finance volume. This volume is replicated to the system in Munich.
• The host in Munich is mapped to and updates the Sales and Engineering volumes. The Sales volume is replicated from System 2 to System 3 in the Munich data center. The Engineering volume is replicated from System 3 in Munich to System 1 in New York.
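The periodic delta-update process described in the replication process overview can be sketched as a small Python model. This is an illustrative sketch under stated assumptions, not HP's implementation or any MSA/SMU interface: volumes are fixed-size lists of block values, image IDs are assumed to be sequential integers, and the names `ReplicationSnapshot`, `ReplicationSet`, `replicate`, and `replication_images` are hypothetical. It shows how each step pairs a primary-side replication snapshot with a matching secondary-side snapshot under the same image ID, and how only changed blocks are copied after the first full copy.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class ReplicationSnapshot:
    """Read-only, point-in-time copy of a volume's blocks (hypothetical model)."""
    image_id: int
    blocks: Tuple[int, ...]


class ReplicationSet:
    """Hypothetical model of one primary/secondary volume pair.

    Volumes are fixed-size lists of block values. This sketches the
    sync-point logic only; it is not the MSA firmware, CLI, or SMU API.
    """

    def __init__(self, primary: List[int]) -> None:
        self.primary = primary                    # mapped to hosts
        self.secondary = [0] * len(primary)       # not host-accessible
        self.primary_snaps: Dict[int, ReplicationSnapshot] = {}
        self.secondary_snaps: Dict[int, ReplicationSnapshot] = {}
        self.last_sync: Optional[int] = None      # image ID of latest sync point
        self._next_id = 1                         # assumption: sequential IDs

    def replicate(self) -> int:
        """Run one replication step; return how many blocks were copied."""
        image_id = self._next_id
        self._next_id += 1

        # 1. On the primary system, snapshot the primary volume's current data.
        #    The copy below reads from this frozen snapshot, so later host
        #    writes are picked up by the next step, not this one.
        snap = ReplicationSnapshot(image_id, tuple(self.primary))
        self.primary_snaps[image_id] = snap

        # 2. Copy only the delta: blocks that changed since the last sync
        #    point. With no prior sync point, every block is copied.
        base = (self.primary_snaps[self.last_sync].blocks
                if self.last_sync is not None else None)
        copied = 0
        for i, block in enumerate(snap.blocks):
            if base is None or base[i] != block:
                self.secondary[i] = block
                copied += 1

        # 3. Once the copy completes, create the matching snapshot on the
        #    secondary system under the same image ID: a new sync point.
        self.secondary_snaps[image_id] = ReplicationSnapshot(
            image_id, tuple(self.secondary))
        self.last_sync = image_id
        return copied

    def replication_images(self) -> List[int]:
        """Image IDs whose primary and secondary snapshots hold identical data."""
        return sorted(i for i, s in self.secondary_snaps.items()
                      if self.primary_snaps.get(i) == s)


if __name__ == "__main__":
    rs = ReplicationSet([1, 2, 3, 4])
    print(rs.replicate())           # 4: first step is a full copy
    rs.primary[2] = 9               # host updates one block
    print(rs.replicate())           # 1: only the delta is copied
    print(rs.replication_images())  # [1, 2]
```

In this model the delta is computed against the previous primary-side replication snapshot rather than by rescanning the secondary volume: the latest sync point already records exactly what the secondary holds, which is what lets each periodic step send only new data.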